Apple Reveals Details on Training its New AI Models: 4 Key Insights

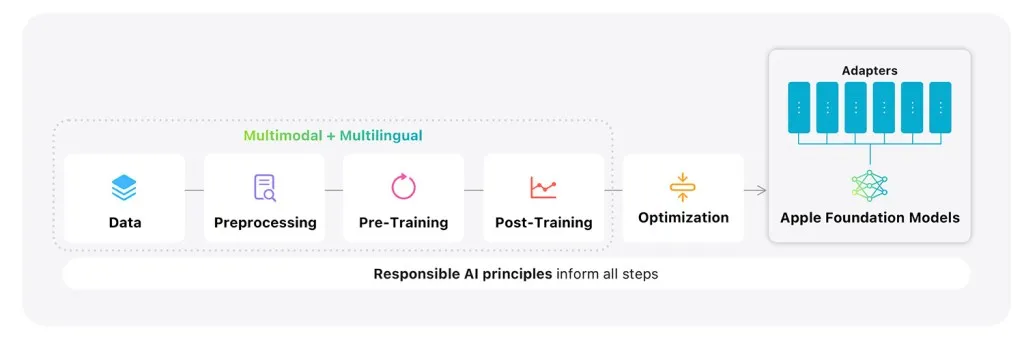

During WWDC25, Apple unveiled updated versions of its on-device and cloud-based foundation models. The company has now released a technical report outlining the training, optimization, and evaluation processes for these models, offering several intriguing behind-the-scenes details.

In a comprehensive document titled “Apple Intelligence Foundation Language Models – Tech Report 2025,” Apple covers many aspects of the new models, including their architecture, data sources, pre-training, post-training, tool-use development, optimizations, and benchmarks. The report is highly technical, but it offers valuable insight into how the models work under the hood.

Here are some particularly noteworthy highlights from the report:

The Local Model is Divided into Two Blocks

We previously knew that Apple’s on-device model, which developers will have access to, contains approximately 3 billion parameters. The company has now clarified that this model is split into two blocks: “Block 1 contains 62.5% of the total transformer layers, while Block 2 contains the remaining 37.5% of the transformer layers, but had the key and value projections removed.”

Removing the key and value projections from Block 2 cuts the memory needed for the key-value (KV) cache by 37.5%, since those layers no longer store keys and values of their own. Apple says the time to generate the first token (roughly, a fragment of a word) also drops by about 37.5%, while the model’s overall performance and output quality are preserved.
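
The 37.5% memory figure follows from the layer split. If only Block 1’s layers store keys and values, the cache holds 62.5% of what a conventional model of the same depth would need. The Python sketch below checks that arithmetic. Its dimensions are made up for illustration: 56 layers, 8 KV heads, head size 128, a 4,096-token context and 2 bytes per value. None of these numbers come from Apple’s report, and as long as the 62.5/37.5 split holds, the saving is the same whatever values you pick.

```python
# Hypothetical sketch: KV-cache size when only some layers store keys/values.
# All model dimensions are illustrative assumptions, not Apple's published config.

def kv_cache_bytes(layers_with_kv: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values (hence the leading factor of 2)."""
    return 2 * layers_with_kv * kv_heads * head_dim * seq_len * bytes_per_value


TOTAL_LAYERS = 56                              # assumed; divisible by 8 so 62.5% is whole
BLOCK1_LAYERS = TOTAL_LAYERS * 5 // 8          # 62.5% of layers keep K/V projections
BLOCK2_LAYERS = TOTAL_LAYERS - BLOCK1_LAYERS   # 37.5% store no keys/values of their own

# Baseline: every layer writes its own keys and values to the cache.
baseline = kv_cache_bytes(TOTAL_LAYERS, kv_heads=8, head_dim=128, seq_len=4096)
# Split design: only Block 1 layers write to the cache.
split = kv_cache_bytes(BLOCK1_LAYERS, kv_heads=8, head_dim=128, seq_len=4096)

savings = 1 - split / baseline
print(f"Block 1: {BLOCK1_LAYERS} layers, Block 2: {BLOCK2_LAYERS} layers")
print(f"KV-cache savings: {savings:.1%}")  # KV-cache savings: 37.5%
```

The layer counts, head counts and context length cancel out of the ratio. Only the fraction of layers that still keep their own keys and values matters.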

It’s worth noting that Apple previously explored swapping parts of an LLM between RAM and flash storage so that larger models could run locally on devices with limited memory. Although the company ultimately took a different approach here, that research shows how consistently it has experimented with ways to get strong local performance, even on memory-constrained devices.

The Cloud-Based Model Features an Innovative Architecture

For its server-side model, Apple developed a custom architecture, called Parallel-Track Mixture-of-Experts (PT-MoE), that is specifically designed for its Private Cloud Compute platform.

In essence, a Mixture of Experts (MoE) model splits a large network into smaller subnetworks, or “experts,” and a learned router activates only a few of them for each token. Loosely speaking, a prompt about cooking would be handled mostly by the experts best suited to it while the others stay idle, although in practice the specialization learned during training is rarely that neatly topical. The result is a very large model overall. However, because only a fraction of it runs for each token, it can respond faster than a single dense model of similar size. MoE models also often match or exceed the quality of dense models that spend the same compute per token.
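
Top-k routing is the core mechanism behind “activating only a few experts.” This is a minimal, hypothetical sketch of it for a single token. The expert count, the value of k, the random router weights and the toy experts (which just scale the input) are all assumptions for illustration. None of them reflect Apple’s PT-MoE configuration.

```python
import math
import random

# Minimal sketch of top-k Mixture-of-Experts routing for one token.
# Sizes, router weights, and the toy "experts" are hypothetical.

NUM_EXPERTS = 8
TOP_K = 2
DIM = 4

rng = random.Random(0)
# One router weight vector per expert; an expert's score is a dot product with the token.
router = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
# Each toy expert just scales the token by a different constant.
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(NUM_EXPERTS)]


def moe_forward(token):
    scores = [sum(w * v for w, v in zip(weights, token)) for weights in router]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(NUM_EXPERTS), key=lambda e: scores[e], reverse=True)[:TOP_K]
    # Softmax over the chosen experts' scores gives the mixing (gate) weights.
    m = max(scores[e] for e in chosen)
    exps = {e: math.exp(scores[e] - m) for e in chosen}
    total = sum(exps.values())
    gates = {e: exps[e] / total for e in chosen}
    # Output is the gate-weighted sum of the selected experts' outputs.
    output = [0.0] * DIM
    for e, g in gates.items():
        for i, v in enumerate(experts[e](token)):
            output[i] += g * v
    return output, gates


out, gates = moe_forward([0.5, -1.0, 2.0, 0.1])
print("experts used:", sorted(gates), "gate weights sum:", round(sum(gates.values()), 6))
```

Only `TOP_K` of the `NUM_EXPERTS` expert functions run for each token. That is where the compute saving comes from, even though the total parameter count grows with the number of experts.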

Apple built a new type of Transformer, called the Parallel Track Transformer, and scaled it up with MoE layers. In a traditional Transformer, every token passes through a single stack of layers. Apple’s design instead divides the model into multiple parallel tracks. Each track processes tokens independently, and the tracks synchronize only at specific points. Within each track, every other regular transformer layer is replaced with an MoE layer that activates only a few experts per token. Because the tracks rarely need to coordinate with one another, the design reduces the bottlenecks that arise when every part of the system has to synchronize constantly.
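
The scheduling idea can be illustrated with a toy model: independent tracks that only exchange information at block boundaries. This is a rough sketch under heavy simplifying assumptions. A single float stands in for the hidden state, and the layer functions are placeholders. Which layers are MoE (here the odd-indexed ones) is also assumed, as is the averaging used to merge tracks at each sync point. It shows the communication pattern, not Apple’s actual implementation.

```python
from typing import Callable, List, Tuple

Layer = Callable[[float], float]


def build_track(num_layers: int, track_id: int) -> List[Layer]:
    """One track's layer stack; a float stands in for the hidden state (toy model)."""
    layers: List[Layer] = []
    for i in range(num_layers):
        if i % 2 == 1:
            # Every other layer stands in for an MoE layer (assumed: the odd-indexed ones).
            layers.append(lambda x, t=track_id: 0.9 * x + 0.01 * t)
        else:
            # Stand-in for a regular dense transformer layer.
            layers.append(lambda x: x + 1.0)
    return layers


def run_parallel_tracks(x: float, num_tracks: int, layers_per_track: int,
                        block_depth: int) -> Tuple[float, int]:
    tracks = [build_track(layers_per_track, t) for t in range(num_tracks)]
    states = [x] * num_tracks
    syncs = 0
    for start in range(0, layers_per_track, block_depth):
        # Inside a block, each track advances on its own (could run on separate devices).
        for t in range(num_tracks):
            for layer in tracks[t][start:start + block_depth]:
                states[t] = layer(states[t])
        # Sync point at the block boundary: merge the tracks (assumed: a plain average).
        merged = sum(states) / num_tracks
        states = [merged] * num_tracks
        syncs += 1
    return states[0], syncs


result, syncs = run_parallel_tracks(0.0, num_tracks=4, layers_per_track=16, block_depth=4)
print(f"sync points: {syncs}")  # sync points: 4
```

With 16 layers per track and blocks of 4, the tracks communicate only 4 times. By contrast, a conventional tensor-parallel layout typically exchanges data inside every layer.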

Apple pairs this with interleaved global and local attention layers. Local layers attend only to nearby tokens, while global layers look across the entire context, balancing fine-grained context with broader understanding. The result is a modular, efficient, and scalable model that Apple says is faster and leaner without sacrificing output quality.
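
The difference between local and global attention comes down to which earlier tokens each position is allowed to see. The sketch below builds both kinds of causal mask and an interleaved layer pattern. The sliding-window size and the “one global layer every four layers” ratio are hypothetical choices for illustration, not figures taken from Apple’s report.

```python
from typing import List, Optional


def causal_mask(seq_len: int, window: Optional[int] = None) -> List[List[bool]]:
    """mask[q][k] is True if query position q may attend to key position k."""
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            allowed = k <= q  # causal: never look at future tokens
            if window is not None:
                allowed = allowed and q - k < window  # local: only the last `window` tokens
            row.append(allowed)
        mask.append(row)
    return mask


def layer_pattern(num_layers: int, global_every: int = 4) -> List[str]:
    # Assumed ratio: every `global_every`-th layer is global, the rest use a sliding window.
    return ["global" if (i + 1) % global_every == 0 else "local" for i in range(num_layers)]


pattern = layer_pattern(8)
local = causal_mask(6, window=2)
full = causal_mask(6)
print(pattern)  # ['local', 'local', 'local', 'global', 'local', 'local', 'local', 'global']
print("keys visible to last token (local):", sum(local[-1]))  # 2
print("keys visible to last token (global):", sum(full[-1]))  # 6
```

A local layer’s cost and cache size are bounded by the window rather than by the full context length. The occasional global layers still let information flow across the whole input.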

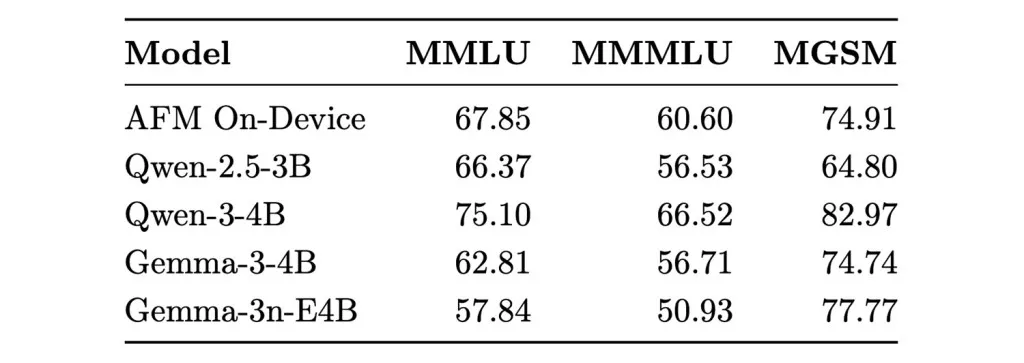

Apple Increased Multilingual Representation by 275%

One criticism of Apple Intelligence’s initial rollout was its limited language support beyond English. With its new models, Apple has expanded language capabilities, and the report details the steps taken to achieve this.

According to the document, Apple increased the share of multilingual data used in training from 8% to 30%, drawing on both organic and synthetic content. The company also expanded its tokenizer’s vocabulary (the set of tokens the model works with) by 50%, from 100,000 to 150,000 tokens. Apple states that these changes led to “significant gains” in performance on non-English benchmarks, particularly after reinforcement learning fine-tuning.
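
The headline figure of 275% is a relative increase in the multilingual share of the training mix, going from 8% to 30%. It is not a direct measure of how many more multilingual tokens were used, since the size of the overall dataset also matters. A quick check of both percentages:

```python
def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100


multilingual = percent_increase(8, 30)        # multilingual share of training data (%)
vocab = percent_increase(100_000, 150_000)    # tokenizer vocabulary size (tokens)
print(f"Multilingual share: +{multilingual:.0f}%")  # Multilingual share: +275%
print(f"Tokenizer vocabulary: +{vocab:.0f}%")       # Tokenizer vocabulary: +50%
```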

The report explains that evaluations were conducted using prompts written by native speakers (rather than translations), and the model was assessed for both accuracy and the naturalness of its responses in local contexts. This approach is consistent with a recent Apple Research study. Practically, these improvements mean that features like Writing Tools should function more reliably in the supported languages.

How Apple Sourced its Data

As with its earlier models, most of the training data came from web crawling. Apple notes that its Applebot crawler respects robots.txt exclusions, meaning it does not scrape content from websites that opt out this way.
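
A robots.txt exclusion works as follows. A site lists path prefixes that a named crawler should not fetch, and a compliant crawler checks each URL against those rules before requesting it. The sketch below uses Python’s standard-library `urllib.robotparser` with a made-up robots.txt for a hypothetical site. It illustrates the protocol itself, not how Applebot is implemented.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a made-up site that keeps Applebot out of /private/.
robots_txt = """\
User-agent: Applebot
Disallow: /private/
""".splitlines()

parser = RobotFileParser()
parser.parse(robots_txt)  # parse rules from lines instead of fetching over the network

for url in ("https://example.com/articles/1", "https://example.com/private/notes"):
    verdict = "allowed" if parser.can_fetch("Applebot", url) else "blocked"
    print(f"{url}: {verdict}")
```

Here the article URL is allowed and the `/private/` URL is blocked for the `Applebot` user agent. A crawler that honors the file simply skips any URL for which `can_fetch` returns `False`.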

Here’s how Apple sourced the data for its new models:

- Publicly available web data: The largest portion of training data came from Applebot crawling web pages. Apple applied multiple layers of filtering to remove low-quality, unsafe, or irrelevant content, including spam, shallow text, and broken formatting.

- Licensed data: Apple confirmed that some training data was licensed from publishers, though it provided few details. Earlier reports suggested negotiations with Condé Nast (The New Yorker, Vogue, Wired, etc.), NBC News, and IAC (People Magazine, The Daily Beast, Better Homes and Gardens, etc.), so some of this material likely contributed to the dataset.

- Synthetic data: Apple generated synthetic data using smaller models and custom pipelines, particularly for math, code, instruction tuning, and vision-language tasks. While the exact proportion of this data in the dataset wasn’t specified, it played a significant role in key training steps such as fine-tuning, reinforcement learning, and enhancing multilingual support.

- Visual data: For image understanding, Apple collected over 10 billion image–caption pairs, including screenshots with OCR and handwritten notes. It also used its own models to generate additional, richer captions. Previous reports of licensing discussions with Shutterstock suggest that some of its material may have been included.
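
The web-data bullet mentions filtering out spam, shallow text and broken formatting. The toy filter below gives a feel for what such heuristics can look like. Every rule in it is invented for illustration, including the word-count cutoff, the symbol-ratio threshold and the spam phrases. Apple has not published its filtering rules at this level of detail.

```python
import re

# Toy web-text quality filter; all heuristics and thresholds are hypothetical.
MIN_WORDS = 50          # assumed cutoff for "shallow" pages
MAX_SYMBOL_RATIO = 0.2  # assumed cutoff for broken formatting / markup debris
SPAM_PATTERNS = [re.compile(p, re.IGNORECASE) for p in (r"buy now", r"click here")]


def keep_document(text: str) -> bool:
    words = text.split()
    if len(words) < MIN_WORDS:
        return False  # too little content to be useful
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    if symbols / len(text) > MAX_SYMBOL_RATIO:
        return False  # mostly punctuation or markup: likely broken formatting
    if any(p.search(text) for p in SPAM_PATTERNS):
        return False  # spam-like phrasing
    return True


article = "The report describes how the model was trained. " * 10
spam = "Click here to claim your prize today. " * 20
print(keep_document(article))                   # True
print(keep_document(spam))                      # False
print(keep_document("Click here to buy now!"))  # False
```

Production pipelines typically combine cheap rules like these with learned quality classifiers. This sketch only shows the rule-based layer.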

9to5Mac’s View

While Apple has faced criticism regarding its perceived lag in the AI space compared to competitors, this report underscores that the company is far from stagnant. It offers valuable insight into the underlying improvements and limitations of Apple’s latest models, alongside extensive details on a privacy-conscious approach that few other companies are even attempting.

About the author: Koosha Mostofi

I’m Koosha Mostofi — a multidisciplinary media creator, full-stack developer, and automation engineer, currently based in Tbilisi, Georgia. With more than two decades of professional experience, I’ve been fortunate to work at the crossroads of technology and creativity, delivering real-world solutions that are both visually engaging and technically robust.